Stable Diffusion Models Study Guide: Hypernetwork & VAE

Next up, let's delve into the Hypernetwork and VAE models.


Hypernetwork

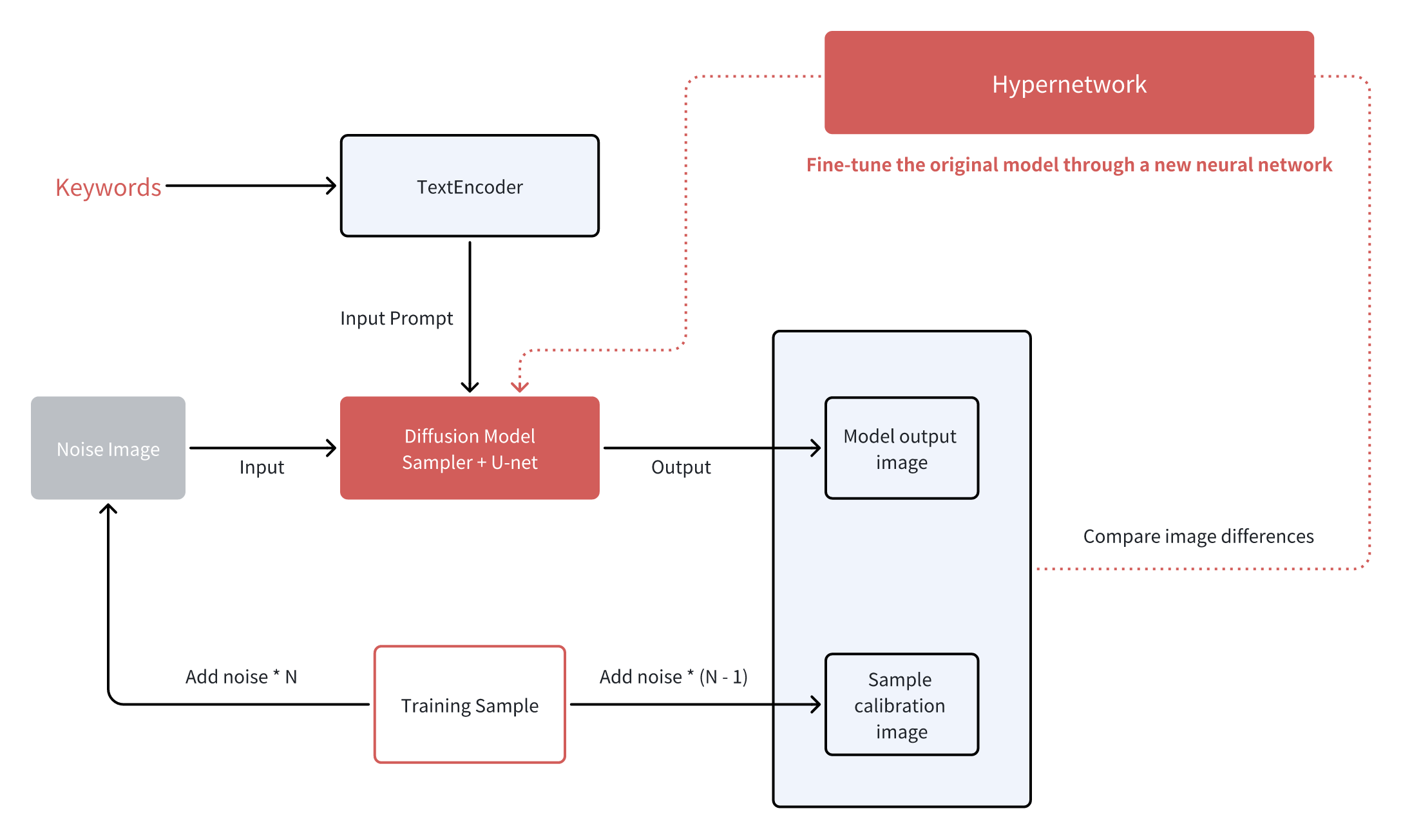

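The hypernetwork model works by attaching a small, separate neural network to the diffusion model. This extra network adjusts how the diffusion model behaves without changing the diffusion model's own weights. In the Stable Diffusion WebUI, these small networks sit in the cross-attention layers and transform the text-conditioning input to those layers. The hypernetwork model is also sometimes called a "super network."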

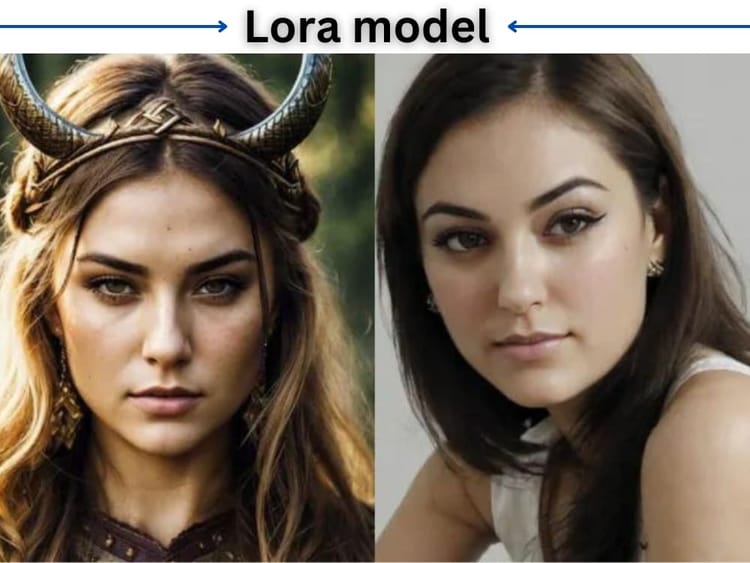
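To make the idea above more concrete, here is a minimal NumPy sketch of one common way a hypernetwork is wired in. A small residual MLP transforms the text-conditioning context before that context is projected into the keys and values of a cross-attention layer. This is a simplified illustration, not the WebUI's actual code: the class and function names, the layer sizes, the random weights and the zero initialization of the output layer are all assumptions chosen for readability.

```python
import numpy as np


class HypernetworkModule:
    """Hypothetical residual MLP: x -> x + relu(x @ w1 + b1) @ w2 + b2."""

    def __init__(self, dim: int, hidden_mult: int = 2, seed: int = 0):
        rng = np.random.default_rng(seed)
        hidden = dim * hidden_mult
        self.w1 = rng.normal(0.0, 0.01, size=(dim, hidden))
        self.b1 = np.zeros(hidden)
        # Zero-initialised output layer: the untrained module is an identity map.
        self.w2 = np.zeros((hidden, dim))
        self.b2 = np.zeros(dim)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        h = np.maximum(x @ self.w1 + self.b1, 0.0)  # ReLU hidden layer
        return x + h @ self.w2 + self.b2  # residual connection


def cross_attention(x, context, wq, wk, wv, hyper_k=None, hyper_v=None):
    """Single-head cross-attention; optional hypernetworks reshape the context."""
    ctx_k = hyper_k(context) if hyper_k is not None else context
    ctx_v = hyper_v(context) if hyper_v is not None else context
    q, k, v = x @ wq, ctx_k @ wk, ctx_v @ wv  # base weights stay frozen
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    return attn @ v


# Toy sizes (assumed): 16 image tokens of width 32, 77 text tokens of width 24.
rng = np.random.default_rng(1)
x = rng.normal(size=(16, 32))
context = rng.normal(size=(77, 24))
wq, wk, wv = (rng.normal(0.0, 0.1, size=s) for s in [(32, 8), (24, 8), (24, 8)])
hyper_k, hyper_v = HypernetworkModule(24, seed=2), HypernetworkModule(24, seed=3)

plain = cross_attention(x, context, wq, wk, wv)
hooked = cross_attention(x, context, wq, wk, wv, hyper_k, hyper_v)
print(plain.shape, np.allclose(plain, hooked))  # (16, 8) True
```

Because the output layer starts at zero in this sketch, the untrained module passes the context through unchanged, so the base model behaves exactly as before until training updates the hypernetwork weights. The base projection weights (`wq`, `wk`, `wv`) are never modified, which is why a hypernetwork can be shipped as a separate, much smaller file.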

Like the LoRA model, the Hypernetwork model does not require retraining the entire original model. Hypernetwork files typically range from a few dozen to a few hundred MB.

In practice, its effect can be thought of as a weaker version of LoRA. Despite its impressive-sounding name, it has drawn little attention online, and as LoRA has grown in popularity, it has largely replaced Hypernetwork models in the Chinese community.

VAE

Finally, we have the VAE model. As briefly introduced in the introductory guide, its job is to decode image information from the latent space back into a normal image. Although the VAE is part of the CKPT model, it does not control the content of the drawing the way the previous models do; instead, it is dedicated to correcting the final image.
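The encode/decode round trip can be summarized as follows, writing $\mathcal{E}$ for the VAE encoder and $\mathcal{D}$ for the decoder. The numbers are those of the Stable Diffusion 1.x VAE (4 latent channels, 8× spatial downsampling, scaling factor of about 0.18215); other model families may use different values.

$$
z = s \cdot \mathcal{E}(x), \qquad \hat{x} = \mathcal{D}(z / s), \qquad x \in \mathbb{R}^{3 \times H \times W},\ z \in \mathbb{R}^{4 \times \frac{H}{8} \times \frac{W}{8}},\ s \approx 0.18215
$$

For a 512×512 image, the latent $z$ is 4×64×64. The diffusion model only works on $z$; every finished image passes through the decoder $\mathcal{D}$, so a faulty decoder affects all outputs regardless of the prompt. The "f8" in VAE names such as kl-f8-anime2 most likely refers to this factor-of-8 downsampling.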

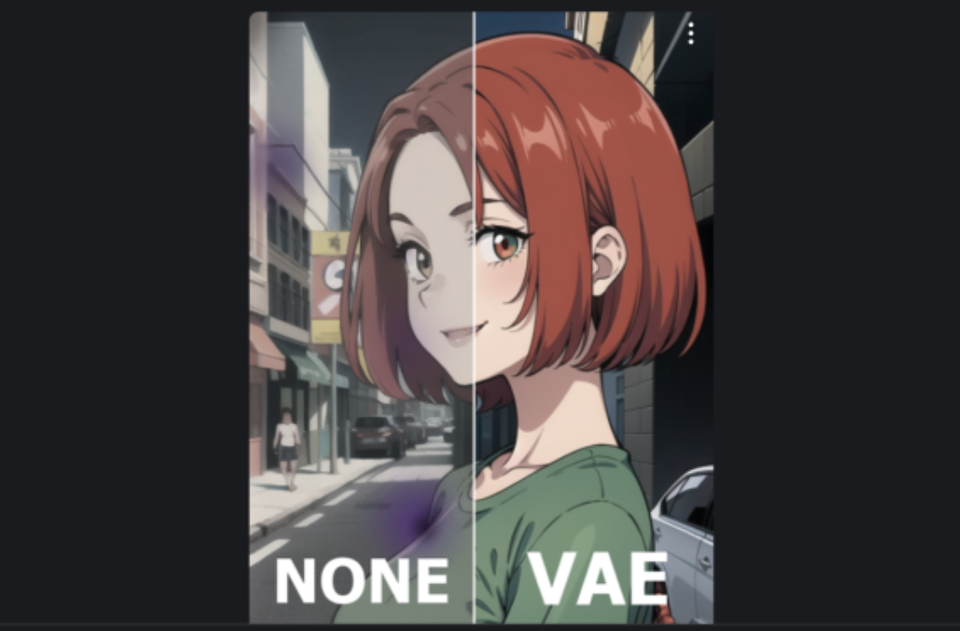

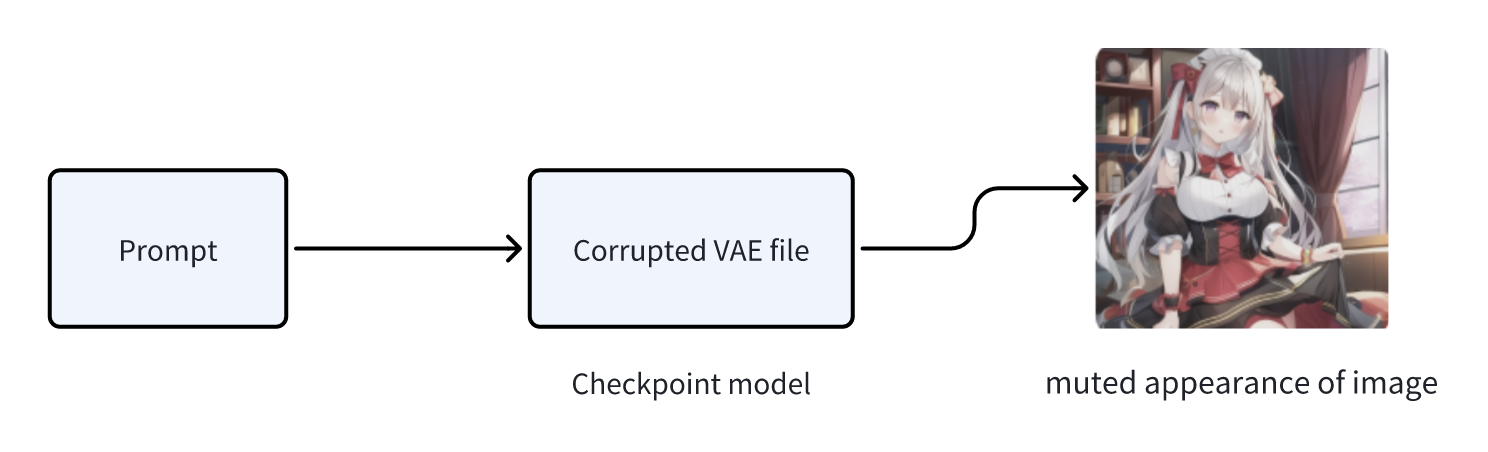

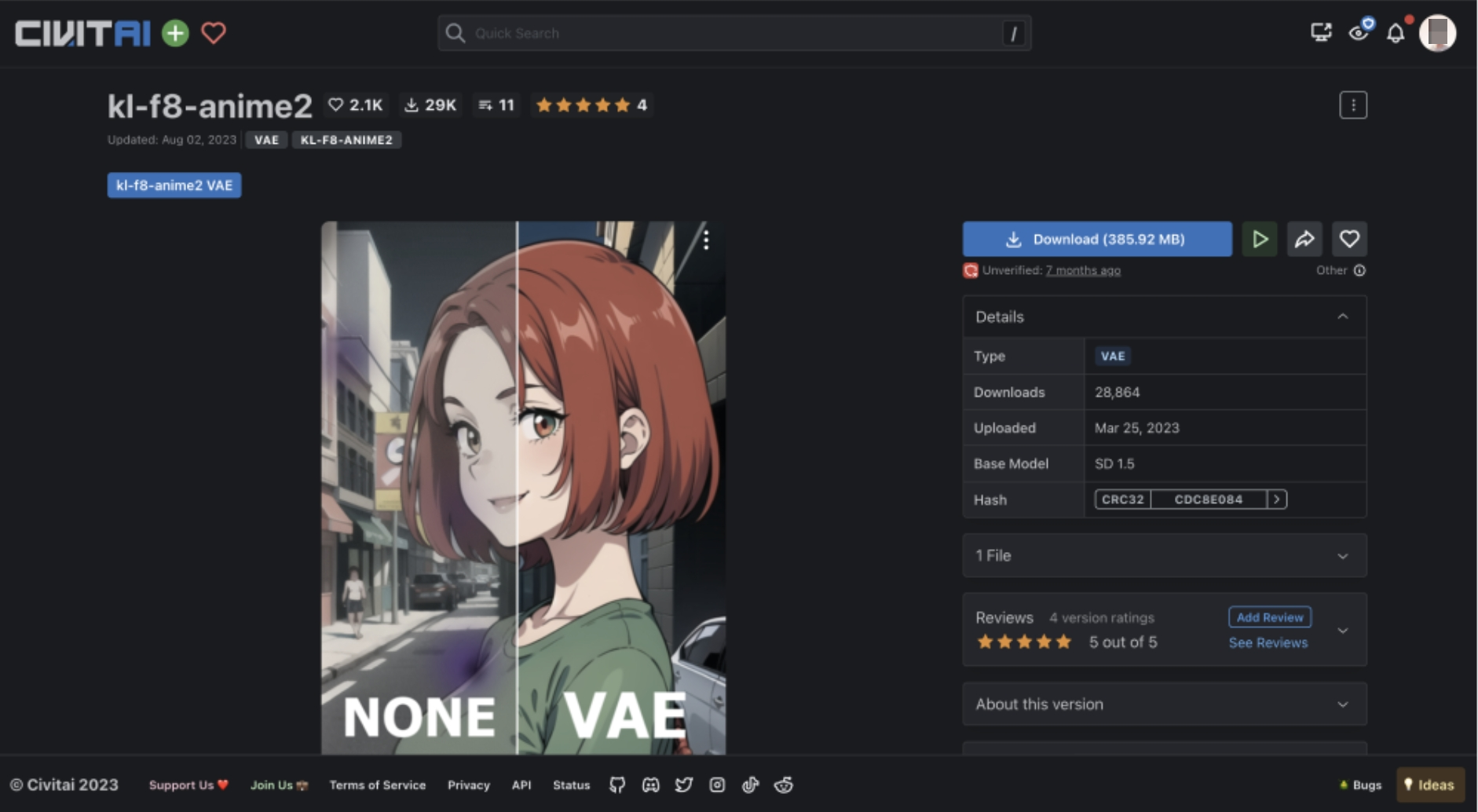

When using CKPT models shared online, the generated images sometimes look muted and grayish. Applying a VAE model helps restore the colors and contrast, giving a better-looking result.

The actual cause of this muted look is a damaged VAE inside the base CKPT model. When the image is decoded from the latent space, some image information is lost, which produces the grayish tone. Many models in the community are derived by merging popular models.

If the VAE in one of the source models has problems, the merged model inherits the same grayness. One notorious example is the merged model Anything4.5.

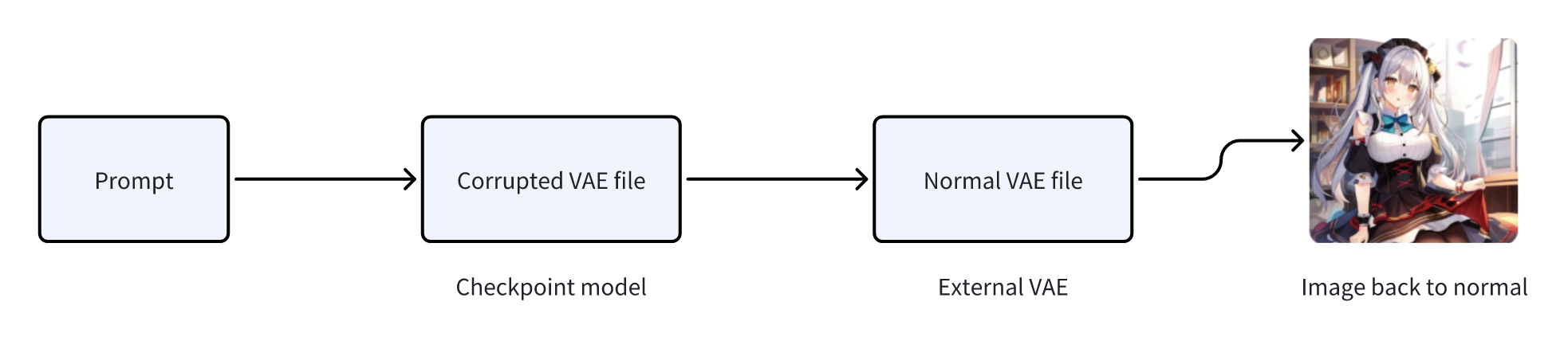

In such cases, the VAE has to be repaired to bring the base model back to normal. However, fixing the VAE in every affected model is technically involved and can be costly. To work around this, the WebUI provides an external VAE option: when you select a working VAE model during image generation, the damaged VAE built into the base model is ignored in favor of the external one. This is why selecting an external VAE restores the image colors.

However, switching to an external VAE is not a long-term solution. Some models may produce blurry images or irregular line patterns once an external VAE is loaded. In addition, authors often rename community-provided VAEs when bundling them with their own models.

This wastes resources, because the community ends up downloading many duplicate VAE files under different names. One way to tackle this is to check each model's hash value in the Ai-Autumn launchpad. A hash works like a model's ID card: identical files have the same hash, and different hash values indicate different files.
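A hash comparison like the one described above can also be reproduced with a short script that groups the files in a folder by their SHA-256 digest. This is a sketch under assumptions: it hashes the whole file with SHA-256, which may not match the short hash format the Ai-Autumn launchpad or the WebUI displays, and the function names and file extensions are hypothetical choices.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large model files need little memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicate_files(folder: str, exts=(".pt", ".ckpt", ".safetensors")) -> dict:
    """Return {sha256: [file names]} for every digest shared by 2+ files."""
    groups = defaultdict(list)
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in exts:
            groups[sha256_of(path)].append(path.name)
    return {digest: names for digest, names in groups.items() if len(names) > 1}
```

For example, `find_duplicate_files(r"models\VAE")` lists VAE files that are byte-for-byte identical despite having different names, so all but one copy can be deleted safely.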

Fortunately, on CIVITAI most newly trained CKPT models ship with a properly functioning VAE. If a model's VAE does have problems, the author typically recommends a VAE on the model's introduction page. There are also reusable VAE models, such as [kl-f8-anime2], which is included in the Ai-Autumn launchpad.

VAE models are placed in \models\VAE. Because a VAE works alongside a Checkpoint (large) model, you can rename a VAE to match the name of its Checkpoint model and then select Automatic in the VAE setting. The WebUI will then load the matching VAE automatically whenever you switch to that Checkpoint model.
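The Automatic matching described above can be sketched as a lookup by file name. This is a simplified, hypothetical illustration, not the WebUI's actual code: the function name `find_matching_vae`, the accepted extensions, and the two naming patterns it tries (`<checkpoint name><ext>` and `<checkpoint name>.vae<ext>`) are assumptions, and the exact rules your WebUI version uses may differ.

```python
from pathlib import Path
from typing import Optional

# Assumed VAE file extensions; adjust to what your WebUI version accepts.
VAE_EXTENSIONS = (".pt", ".ckpt", ".safetensors")


def find_matching_vae(checkpoint_path: str, vae_dir: str) -> Optional[Path]:
    """Hypothetical 'Automatic' lookup: find a VAE named after the checkpoint."""
    stem = Path(checkpoint_path).stem  # "anything-v4.5.ckpt" -> "anything-v4.5"
    for ext in VAE_EXTENSIONS:
        # Try "<name><ext>" first, then "<name>.vae<ext>".
        for name in (stem + ext, stem + ".vae" + ext):
            candidate = Path(vae_dir) / name
            if candidate.is_file():
                return candidate
    # No match: fall back to the VAE baked into the checkpoint.
    return None


# Example usage (paths are illustrative):
# find_matching_vae(r"models\Stable-diffusion\anything-v4.5.ckpt", r"models\VAE")
```

In this sketch, returning `None` stands for keeping the checkpoint's built-in VAE. Matching on exact names rather than a loose prefix avoids picking up a VAE meant for a similarly named checkpoint.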